Stupidly Obscure Programming in a Troubled Time

Since obsessively underlining passages in a tattered copy of Goodbye to Berlin hasn't proven to be the uplifting diversion I was hoping for, I resolved to bash my head against some really complicated scala code that I'm not qualified to write and that nobody is asking for either.

So that this exercise in self-abuse could pass for a reasonable use of my time - but not too reasonable, since that would be a giveaway - I decided to link it to an existing, longstanding obsession of mine: paradigms and constructs for concurrency.

Warning of what's to come:

- Concurrent data retrieval in Haskell with

Haxland in Scala withFetch, observing thatHaxlbenefits from extensions to thedoconstruct that we don't have with Scala'sfor. - Tedious construction of a Scala macro that purports to extend

forin the same fashion. Many sad lessons are learned along the way. - Demonstration of success in this endeavor, with some rueful acknowledgments.

- An unexpected rant about programming that uses types with names from category theory in combination with blocks of expressions involving left-arrows.

Waiting for guff

A couple of years ago, I offered to the the ungrateful internet a numbing fugue of mumblings that purported to explain how parallelism and batching were achieved in the Haxl package for Haskell. For no obvious reason, nearly all my code was in Clojure, which made no sense in the context of Haskell, but paid some dividends in the end, because the modern[ish] miracle of homoiconicity, plus a nice interface to quasar fibers, facilitated an alternate implementation based on asynchronicity and no monadic guff whatsoever.

Two years is a long time to wait for monadic guff, but good things - well, things anyway, come to those who wait.

Buried within the verbiage1 of the my previous post were a few pieces of information that will be useful today.

First, the Haxl is a Monad, allowing data retrieval operations to be composed

in do notation. In this contrived example, we retrieve the BFF of each member of

some grp, and then we produce a list of the full names of each of the BFFs.

runHaxl env $ do

ids <- getIds grp

mapM getBffName

where

getBffName id = do

bffId <- getBff id

fn <- getFirstName bffId

ln <- getLastName bffId

fn ++ " " ++ ln

The cool part is that, assuming proper implementations of getBff, getFirstName

and getLastName, the queries to assemble this information will be batched into

two queries that

- retrieve the

bffIds for everyone in the group - retrieve the first and last names for all the

bffIds so obtained.

It does this by not performing the queries as soon as they're made, but (loosely) by building up a tree of which queries depend on the results of which other ones, accumulating as many queries as it can do without those unknown dependencies and executing them in a batch, then repeating, using the all available information based on previous queries.

There's a bit of special magic necessary to thwart the usual ordering described

in nested fmap and >>=. In fact, the Haskell compiler has been

rejiggered to take advantage

of the fact that

Haxlis an applicative functor- Neither the

getLastNamenorgetFirstNamecall depends on the other

and automatically translate the 2nd do block to

getBffName id = do

bffId <- getBff id

(fn,ln) <- (,) $ (getFirstName bffId) <*> (getLastname bffId)

fn ++ " " ++ ln

where fn and ln are now retrieved simultaneously.

Without this magic, there would have been three queries, one to get the bffIds,

and then one each to get the first and last names. With the magic, the last two

queries are combined. So magic gets us from 9:3 to 9:2.

Go fetch

In Scala, there's a library similar to Haxl, called Fetch,

which (again, assuming that proper data sources have been written), can

batch together what look like queries for individual items. Just accept for now

that the various getXXX methods return Fetch instances.

getIds(grp).traverse {id =>

for(bffId <- getBff(id)

fn <- getFirstName(bffId)

ln <- getLastName bffId)

yield s"$fn $ln"

}

Unfortunately, there is no special applicative magic in Scala's for

comprehension, so if we wanted to get the job done in two batches, we'd

have to do the applicative transform ourselves...

Sort of. Scala is a language that, depending on your perspective, either

provides sophisticated support for multi-argument functions, or fails

to recognize that multi-argument functions are actually curried

single-argument functions, so "applicative" in Scala is sort of a

qualitative equivalent: Not support per se for a <*> combinator

def starcyclops[F[_], A, B](f: F[A => B]): F[A] => F[B]

but rather support for what you would want to accomplish by chaining such

things together, that is, apply a function of N parameters to N arguments

of type F[_], e.g.

def mapN[F[_], T1, ... Tn, R](f: (T1,T2 ... Tn) => R))

(F[T1],F[T2] ... F[Tn]): F[R]

In addition to the mapN, there are various syntactical conveniences. For

example, it's very common to want mapN(TupleN.apply _)(f1, f2, ... fN), so

CATS provides syntactic sugar to express this as (f1, f2, ... fN).tupled.

Thus, the "applicative transform" that we must perform manually thus looks like this:

getIds(grp).traverse {id =>

for(bffId <- getBff(id)

(fn,ln) <- (getFirstName(bffId), getLastName bffId).tupled

yield s"$fn $ln"

}

In the next section, we'll try to verify that Fetch actually delivers the expected concurrency.

Quick demonstration of concurrency with Fetch

Closely following the examples in the fetch documentation, I wrote some fake data sources that pretend to divine best-friends-for-life and names, but actually just look them up in a static dictionary. All data sources extend a trait defined in the Fetch library,

trait DataSource[I, A] {

def name: String

def fetch[F[_] : ConcurrentEffect : Par](id: I): F[Option[A]]

def batch[F[_] : ConcurrentEffect : Par](ids: NonEmptyList[I]): F[Map[I, A]]

}

where the fetch method fetches one value, and batch batches up several at once. Note that,

while the trait is defined in the Fetch library, it nowhere refers to other Fetch

types. The implicit ConcurrentEffect is just something with a runCancelable method,

which does what it sounds like it does, and Par has a parallel method, whose

return value gives us access to various Applicative methods. So what we're doing

is guaranteeing that fetch and batch will be used with constructs that can

be run in parallel. Those classes come from the Cats

library, rather than Fetch.

The boilerplate for my fake data sources can be condensed into a sub-trait,

trait DictSource[A,B] extends DataSource[A,B] {

protected val dict: Map[A,B]

final override def fetch[F[_] : ConcurrentEffect : Par](a: A) = logReturn(dict.get(a), s"One $name")

final override def batch[F[_] : ConcurrentEffect : Par](as: NonEmptyList[A]) =

logReturn(dict.filterKeys(as.toList.contains), s"${as.size} x $name")

private def logReturn[F[_] : ConcurrentEffect : Par, A](result: A, msg: String) = {

val id = Thread.currentThread.getId

Sync[F].delay(println(s"Requesting [tid=$id] $msg")) >>

Sync[F].delay(Thread.sleep(100)) >>

Sync[F].delay(println(s"Receiving [tid=$id] $msg")) >>

Sync[F].pure(result)

}

}

that looks for results in its dictionary, and, conveniently, documents the

data requests on stdout so we can see what's happening and when. Other

than thinking up some amusing names, implementing the three data sources

is trivial.

type PersonId = Int

type LastName = String

type FirstName = String

type BFF = PersonId

implicit object BFFSource extends DictSource[PersonId, BFF] {

override val name = "Best Friends Forever"

override val dict = Map(1 → 2, 2 → 3, 3 → 1)

}

implicit object FirstNameSource extends DictSource[PersonId, FirstName] {

override val name = "First Name"

override val dict = Map(1 → "Biff", 2 → "Heinz", 3 → "Tree")

}

implicit object LastNameSource extends DictSource[PersonId, LastName] {

override val name = "Last Name"

override val dict = Map(1 → "Loman", 2 → "Doofenshmirtz", 3 → "Trunks")

}

At this point, something actually called "Fetch" enters the picture,

via the following methods that will produce

Fetch instances that use the defined data sources, given a particular

concurrency construct F.

def getBFF[F[_]: ConcurrentEffect : Par](id: PersonId): Fetch[F,BFF] =

Fetch(id, BFFSource)

def getFirstName[F[_]: ConcurrentEffect : Par](id: PersonId) = [F, FirstName] =

Fetch(id, FirstNameSource)

def getLastName[F[_]: ConcurrentEffect : Par](id: PersonId): Fetch[F, LastName] =

Fetch(id, LastNameSource)

Three concerns are nicely separated and can be implemented independently:

- The

DataSource, which in real life would actually go to some database and retrieve information, singly or in batches. - The

ConcurrentEffect/Parconstruct for running the data retrieval routines. - The

Fetchlogic to gather up queries to be run in batches.

This decoupling comes at the cost of quite a bit of type complexity, however.

In an earlier

version of the library, Fetch had one type parameter - specifying the type of thing

being fetched, and, since the properties of Fetch did not depend on the F

we didn't need to carry around the implicit guarantees that F has ConcurrentEffect

and Par instances. (Presumably that ugliness will disappear with implicit function

types in Scala 3.)

Now we'll assemble a query to get the full name of the BFF of one person:

def getBFF[F[_]: ConcurrentEffect : Par](id: PersonId): Fetch[F, String] = for {

bff ← getBFF(id)

fn ← getFirstName(bff)

ln ← getLastName(bff)

} yield s"$fn $ln"

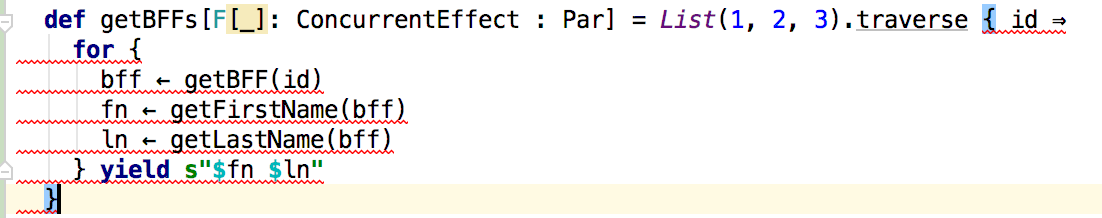

and another that traverse this to get three BFFs,

def getBFFs[F[_]: ConcurrentEffect : Par]: Fetch[F,List[String]] = List(1, 2, 3).traverse(getBFF[F])

run the query,

println(Fetch.run[IO](getBFFs).unsafeRunSync())

and see what kind of parallelism we get, as indicated by the printlns in

the contrived logReturn method:

First try:

Requesting [tid=15] 3 x BFF Receiving [tid=15] 3 x BFF Requesting [tid=13] 3 x Last Name Receiving [tid=13] 3 x Last Name Requesting [tid=14] 3 x First Name Receiving [tid=14] 3 x First Name List(Heinz Doofenshmirtz, Tree Trunks, Biff Loman)

This is about as expected - though it is a bit sad that none of the BFFships are mutual. While we logically queried for 9 items - fully interleaved as requests for BFF, LastName, FirstName, BFF, LastName, FirstName, etc. - our queries ran in only 3 batches, with like queries grouped together. Also, as expected, no advantage was taken of the fact that the two name queries were theoretically independent.

If we manually parallelize the two independent name queries:

def getBFF2[F[_]: ConcurrentEffect : Par](id: PersonId): Fetch[F, String] = for {

bff ← getBFF(id)

(fn,ln) ← (getFirstName(bff), getLastName(bff)).tupled

} yield s"$fn $ln"

we see a subtly different execution:

Requesting [tid=15] 3 x BFF Receiving [tid=15] 3 x BFF Requesting [tid=13] 3 x Last Name Requesting [tid=14] 3 x First Name Receiving [tid=13] 3 x Last Name Receiving [tid=14] 3 x First Name List(Heinz Doofenshmirtz, Tree Trunks, Biff Loman)

Note how the grouping has changed. Instead of fully interleaved Requesting/Receiving messages, both first and last name requests go out simultaneously. We get the desired reduction from 9 batches down to two.

But I don't want to do anything manually

Why should important people like us be forced to transform our code manually?

Less frivolously, since the whole purpose libraries like Haxl and Fetch is

to detect operations that could occur concurrently and batch them automatically,

it's annoying to have to keep track of the limits to their detection abilities.

It would be cool if Scala's for could do something similar to Haskell's do,

detecting when the monads are in fact parallelizable and independent, and

tupling them together.

One reason this is difficult to accomplish in Scala is that at the time that

for desugaring occurs, the compiler has not yet performed type resolution,

so it has no way of knowing whether the rhs of the left-arrow has applicative

powers: for comprehensions simply get textually converted into nested flatMap and map

calls, e.g.

for(i <- Some(1); j <- Some(2); k <- Some(3)) yield i+j+k'

becomes

Some(1).flatMap(((i) => Some(2).flatMap(((j) => Some(3).map(((k) => i.$plus(j).$plus(k)))))))

irrespective of the abilities of the Option class. In fact, we could stick in

arbitrary monad poseurs, and the parser couldn't care less.

for(i <- Harry(1); j <- Dick(2); k <- Jane(3)) yield i+j+k'

Harry(1).flatMap(((i) => Dick(2).flatMap(((j) => Jane(3).map(((k) => i.$plus(j).$plus(k)))))))

Obviously the compiler will eventually complain, just not yet.

If we want to get messy with compiler internals and fetch (j,k) at once, we're going

to have to do so after the typer has run, which means we'll be working with with

code that has already been converted into nested maps.

Writing a macro to do this will be awkward,

but not impossible, especially if set our sights relatively low.

For example, I'll assume the simplest possible for constructs - no guards, no =

assignments. Additionally, the grouping will only work for independent terms that happen

to be adjacent; there will be no re-ordering of the code.

Also, I'm going to keep testing to an absolute minimum, guaranteeing that the macro will work under practically no other circumstances, as is fitting, given that the whole purpose of this work is self-abuse.

Under the Macroscope

Scala macros are fun and easy, in the sense that it's incredibly easy to make obscure mistakes and fun to laugh at yourself afterwards for making them. Also, they're difficult to debug, so when you do manage to fix a problem, you feel very proud of yourself.

The goal is to be able to write

def getBFF[F[_]: ConcurrentEffect : Par](id: PersonId): Fetch[F, String] =

LiftFetchTuples {

for {

bff ← getBFF(id)

fn ← getFirstName(bff)

ln ← getLastName(bff)

} yield s"$fn $ln"

}

where LiftFetchTuples.apply is actually a macro that teases apart the map-nest

passed to it as an argument, and converts it into the version with

(fn,ln) ← (getFirstName(bff), getLastName(bff)).tupled in it.

I'm going to omit the most boring details (only the most boring details) , so if you want those, take a look at the actual code on github.

We start with the standard macro boilerplate,

def apply[M[_], T](expr: Fetch[M,T])

(implicit semigroupal: Semigroupal[Fetch[M, ?]],

invariant: Invariant[Fetch[M,?]]): Fetch[M,T] =

macro liftTuplesImpl[M, T]

made more complicated than usual by the presence of implicit parameters. We want

a guarantee before the macro is even invoked that

the Fetch being passed to us is amenable to fancy tupling, which

essentially means that there's a Semigroupal typeclass for it. You might wonder why

we need guarantees beyond knowing that the expression is in fact a Fetch: It turns

out that Fetch isn't always semigroupal, just as it doesn't always have

a ConcurrentEffect - the implicit conversions that make it so

depend on implicits of the specific type parameters.

As is the custom in these parts, heavy lifting occurs in a method ending in Impl.

It's not required to have a silly ending that rhymes with "pimple," but if you give your

macro a nice name, then other people will be jealous.

def liftTuplesImpl[M[_], T](c: Context)(expr: c.Tree)( semigroupal: c.Tree, invariant: c.Tree) = ???

Notice that the keyword implicit no longer shows up. The evidence expressions are passed

to us as Abstract Syntax Trees, just like any other argument.

Overlapping recursion

The expression tree we're passed represents nested application of map and flatMap. Aggressively cleaned up, the abstract syntax tree will look like

Apply(TypeApply(Select(vx, flatMapName, typeParams),

Function(args, PossiblyNestedMap))

where

Apply(fun, args)representsfun(args)TypeApply(fun, tparams)representsfun[tparams]Function(arg :: Nil, body)representsarg => bodyPossiblyNestedMaprepresents the entire expression, recursively.

The first 3 are predefined members of Trees. We'll have to write an extractor

for the last.

The quasiquote string context allows one to express the AST more like the code itself, but it has some limitations, but I couldn't figure out how to use it for recursive extraction. I was able to use it for tree construction in places, as you'll see below.

Here's a full-blown extractor that looks for such patterns, which I'll annotate

line by line. The unapply method

matches application of vx.flatMap[T]

object PossiblyNestedMap {

def unapply(tree: Tree): Option[Tree] = tree match {

case Apply(TypeApply(Select(vx, flatMapName), outerMethTypeParam),

to a closure

Function(vArgDef :: Nil,

whose body is another PosiblyNestedMap - that is, unapply will be called

recursively on the body,

PossiblyNestedMap(

and we pick apart whatever got returned from that recursive call

into the inner wx.mapOrFlatMap[T],

Apply(TypeApply(Select(wx, innerMeth), innerMethTypeParam),

applied to an inner closure whose expr body we for now don't care about

Function(wArgDef :: Nil, expr) :: Nil))) :: Nil)

but may itself have once been a PossiblyNestedMap - meaning our recursive call to unapply

would have called itself again. Note that the innerMeth may be a map or a flatMap

depending on where we are in the for expression.

Our basic plan is to re-express this as one (vx,wx).tupled.flatMap( ... )

and return the applicative expression from the extractor. Assuming we've

written the extractor, the main job of our macro will be to transform the tree recursively,

replacing every instance

of an appropriate PossiblyNestedMap with its applicative equivalent.

class TupleLiftingTransformer extends Transformer {

override def transform(tree: Tree): Tree = tree match {

case PossiblyNestedMap(xformed) ⇒ xformed

case _ ⇒ super.transform(tree)

}

}

(new TupleLiftingTransformer).transform(expr)

In our extractor, by the way, there'll be a second, more boring case, for an non-nested map application. We still need to transform it recursively in case there's something interesting lurking in sub-expressions, but that's all we have to do. Finally, there's the most common case, where no map of any sort is found.

case Apply(TypeApply(Select(wm, comb), _), Function(_ :: Nil, _) :: Nil) ⇒

Some(super.transform(tree))

case _ => None

Cats have claws

You might expect that vx and wx would represent expressions of type Fetch,

but you would be wrong. You see, Fetch doesn't actually have a flatMap

method, but can be converted implicitly into a FlatMap.Ops, which does.

If we examine vx in the debugger, we might (well, I did) see something like this

cats.syntax.`package`.all.toFlatMapOps[[A]Fetch[F,A], Author]

(... our getWhatever call ...)

(fetch.`package`.fetchM[F](evidence$47))

The implicit toFlatMapOps method requires evidence that its argument is in the FlatMap

typeclass. FlatMap is implemented by Monad, an instance of which the implicit

fetchM method can provide, as long as it has evidence that the F type parameter

is itself a Monad, which was in turn provided by the implicit parameter of

LiftFetchTuples.apply, to which scala assigned the symbol evidence$47.

So there's a hell of a lot of implicit evidence being called forth so that Fetch

can avoid implementing its own flatMap.

Our immediate problem is to retrieve the actual Fetch from the FlatMap.Ops

it was converted to.

Fortunately, these Ops classes all have a .self method that returns the

original object. So we'll assign

val vm = q"$vx.self"

val wm = q"$wx.self"

here using the convenient quasiquote string context.

Having extracted our get calls, we now need to make sure that they're independent;

i.e. the argument to vm's closure should not be used anywhere within wm. This

is as easy as searching for the v symbol in the w closure. If we find the

symbol, we give up, printout out an informative message and carrying on with

remaining transformation of the tree:

if(wm.find(vArgDef.symbol == _.symbol).isDefined)

c.info(vUsed.get.pos, s"Not lifting, because ${vValDef.symbol} is used on rhs", true)

Some(super.transform(tree))

}

If we pass this test, it should be fine to create a new tupled Fetch. Since

.tupled is just going to trigger another implicit search, it's cleaner to

call the tuple2 function it will end up calling, explicitly passing in its implicit

evidence, which we have from the arguments of our macro:

val newQual = q"_root_.cats.Semigroupal.tuple2($vm, $wm)($semigroupal, $invariant)"

When we flatMap over this new monad, it's closure will take a Tuple2[V,W],

where V and W were the types taken by the two original closures, Here, we extract

the types of the original closure arguments, create the new tuple type, and synthesize

a new argument with a guaranteed unique name:

val vt = oldClosure.vparams.head.tpt.tpe

val wt = oldInnerClosure.vparams.head.tpt.tpe

val tupleOfVWtt: Tree = tq"($vt,$wt)"

val vwArgName = internal.reificationSupport.freshTermName("x$")

val vwArgDef = ValDef(Modifiers(Flag.SYNTHETIC | Flag.PARAM), vwArgName, tupleOfVWtt, EmptyTree)

The body of the new closure still refers to the old v and w, which we must

populate with values from the tuple.

val newBody = Block(

c.internal.valDef(vValDef.symbol, q"$vwArgName._1"),

c.internal.valDef(wValDef.symbol, q"$vwArgName._2"),

transform(body)

val newClosure = Function(vwArgDef :: Nil, newBody)

Altogether, the new closure we build is going to look a bit like

x$123: (V, W) => {

val v = x$123._1

val w = x$123._2

originalBodyOfYieldExpression(v, w) // more or less

}

Finally putting together the full map/flatMap over the new tupled Fetch with the new

closure, (This is one of the places where quasiquotes annoyingly didn't work.)

val ret = Apply(TypeApply(Select(newQual, innerMeth), innerMethTypeParam), newClosure :: Nil)

OK, not finally

We're not really done. First we have to typecheck our new expression,

val rett = c.typecheck(ret)

and even after we do that, there's still a bit of a mess to clean up. One big pitfall

of scala macros is that, in addition to the implicit "ownership" of AST elements that are

components of other AST elements, every symbol we use has to be explicitly owned by another

symbol. For example, all vals declared directly in a function body have symbols

whose .owner is the symbol of the containing Function. The tree of symbol ownership

must exactly parallel the tree of AST elements. Even the slightest mistake will lead

to obscure errors during the delambdafy phase, much later in the compilation

Symbol ownership gets assigned

automatically for us during initial typing, but when we muck up the tree as I've just

done, correct ownership must sometimes be established manually. In this case, we want

to make sure

1. that the new closure has the same owner as the old outer closure, and

2. that the new v and w vals belong to the new closure, instead of

the old outer and inner closures respectively.

To change the owner of the new closure, we need first to get its symbol; as that symbol was assigned just now when we typechecked, we need to extract it from the typed expression:

val Apply(_, newClosureTyped :: Nil) = rett

c.internal.setOwner(newClosureTyped.symbol, oldClosure.symbol.owner)

Now we ensure that the the two old parameters belong to the new closure,

c.internal.changeOwner(rett, vValDef.symbol.owner, newClosureTyped.symbol)

c.internal.changeOwner(rett, wValDef.symbol.owner, newClosureTyped.symbol)

c.info(tree.pos, s"Lifting to $rett", true)

Some(rett)

It's alive!

The LiftFetchTuples'd code compiles, with a few new messages that the macro generated:

[info] /Users/pnf/dev/scala-playground/core/src/main/scala/fetchy/Fetchy.scala:202:25: Not lifting, because value bff is used on rhs

[info] fn ← getFirstName(bff)

[info] ^

[info] /Users/pnf/dev/scala-playground/core/src/main/scala/fetchy/Fetchy.scala:202:10: Lifting to cats.syntax.`package`.all.toFunctorOps[[A]fetch.Fetch[F,A], (fetchy.TestBFF.FirstName, fetchy.TestBFF.LastName)](cats.Semigroupal.tuple2[[A]fetch.Fetch[F,A], fetchy.TestBFF.FirstName, fetchy.TestBFF.LastName](cats.syntax.`package`.all.toFlatMapOps[[A]fetch.Fetch[F,A], fetchy.TestBFF.FirstName](TestBFF.this.getFirstName[F](bff)(evidence$54, evidence$55))(fetch.`package`.fetchM[F](evidence$54)).self, cats.syntax.`package`.all.toFunctorOps[[A]fetch.Fetch[F,A], fetchy.TestBFF.LastName](TestBFF.this.getLastName[F](bff)(evidence$54, evidence$55))(fetch.`package`.fetchM[F](evidence$54)).self)(fetch.`package`.fetchM[F](evidence$54), fetch.`package`.fetchM[F](evidence$54)))(fetch.`package`.fetchM[F](evidence$54)).map[String](((x$13: (fetchy.TestBFF.FirstName, fetchy.TestBFF.LastName)) => {

[info] val fn: fetchy.TestBFF.FirstName = x$13._1;

[info] val ln: fetchy.TestBFF.LastName = x$13._2;

[info] scala.StringContext.apply("", " ", "").s(fn, ln)

[info] }))

[info] fn ← getFirstName(bff)

The "Not lifting" message is straightforward: we need to know bff before we can

call getFirstName, so bff and fn cannot be tupled.

What we can initially tell from the second message and the daunting scroll bar is that we apparently generated a huge amount of code. The full insanity is more easily appreciated by adding some line-feeds and shortening a few FQCN prefixes. One thing that's clear immediately is that the vast majority of the code was actually inserted by scala implicit searches:

toFunctorOps[[A]Fetch[F,A], (FirstName, LastName)]

(

Semigroupal.tuple2[[A]Fetch[F,A], FirstName, LastName] // <-- LOOK HERE SECOND

(

toFlatMapOps[[A]Fetch[F,A], FirstName]

(

getFirstName[F](bff)(ev$54, ev$55) // <-- LOOK HERE FIRST

)

(fetchM[F](ev$54)).self,

toFunctorOps[[A]Fetch[F,A], LastName]

(

getLastName[F](bff)(ev$54, ev$55)

)

(fetchM[F](ev$54)).self

)

(

fetchM[F](ev$54), fetchM[F](ev$54)

)

)

(

fetch.`package`.fetchM[F](ev$54)

)

.map[String](((x$13: (FirstName, LastName)) => {

val fn: FirstName = x$13._1;

val ln: LastName = x$13._2;

scala.StringContext.apply("", " ", "").s(fn, ln)

}))

At the LOOK HERE FIRST comment, you can see the original

getFirstName[F](bff)(ev$54, ev$55), with its ConcurrentEffect and Par

evidence.

It was implicitly wrapped in a call to toFlatMapOps, which was

implicitly provided with monadic evidence (fetchM[F](ev$54)), which

itself relied on the ConcurrentEffect evidence. Now we undo that

wrapping with .self.

At LOOK HERE SECOND, you see the two Fetch queries passed to

Semigroupal.tuple2, the result of which must itself be wrapped

toFunctorOps in order to call .map on it.

At the bottom, you see the new combined closure, which takes a tuple

and extracts the original fn and ln to use in the string expression.

But the really exciting part is that, when we run it, we see the same batch grouping as if we had performed the tupling manually!

Requesting [tid=15] 3 x BFF Receiving [tid=15] 3 x BFF Requesting [tid=13] 3 x Last Name Requesting [tid=14] 3 x First Name Receiving [tid=13] 3 x Last Name Receiving [tid=14] 3 x First Name List(Heinz Doofenshmirtz, Tree Trunks, Biff Loman)

What have we learned today?

If you've made it this far, I hope you've learned that not all self-deprecation should be taken ironically. I warned you that this post constituted self-abuse, and that it would be stupidly obscure. Maybe you learned something about Fetch (having filtered out by ignorant misrepresentations), or maybe you are now more than ever convinced that you either do or do not want to write scala macros - self-knowledge that can be useful in life and work.

I haven't learned anything. I never learn. I did, however come away with a bit of a distaste for writing Haskell-style programs in Scala.

The way Cats implements typeclasses through implicit evidence and conversions

makes sense - or can be made sense of - but it seems really complicated. When

encountering a .map, the first assumption of many programmers will be that this

method was implemented in the class or some trait of its target. By this point,

we're all used to the standard typeclass pattern, where a Foo is wrapped with

an implicit class SpecialFooOps(foo: Foo), but the process can get

byzantine when, say Foo has a type parameter, Foo[T], and SpecialFooOps can only

be instantiated with implicit evidence of Bar[T], and the implicit def providing

that evidence in turn relies on some further evidence of a Wiz[T].

This would be less of a problem if IDEs were capable of following a long chain of implicit dependencies, but, at this point at least, IntelliJ is not, and the only reliable way I found to untangle the implicitness was to set break points in the debugger, then to paste the observed values into an editor and format them. What's worse, IntelliJ is as likely to fail to locate an implicit parameter - hurling jagged red lighting down upon screens of innocent source - as to smilingly approve the worst delinquencies, abandoning you to the tender mercies of scalac typer errors.

And for what?

These typing complications are, unfortunately, most evident when for constructs

are involved. For example, the reason I wrote,

def getBFFs[F[_]: ConcurrentEffect : Par] = List(1, 2, 3).traverse(getBFF[F])

breaking the for construct into its own method,

was not actually to emphasize composability (which I guess it sort of does),

but so I wouldn't have to look at this:

Clearly, one can't expect an IDE to intuit the arbitrary manipulations of my macro, but this example is practically straight out of Fetch documentation.

I maintain that the element most central to broad feasibility of what

Martin Odersky calls "categorical programming" is the for or do construct,

by which an involution of closures presents itself in procedural guise.

Beyond dispute, when the illusion works, the code looks beautiful.

It is also claimed that, when the beautiful code compiles, it is probably correct,

because otherwise typechecking would have failed. I'm dubious of that point

but prepared for argument's sake to accept its truth given a sufficiently clever

typechecker. However, with an insufficiently clever typechecker - one whose

inferencing abilities require frequent nudges, one that disagrees with its multiple

personalities across the tool-chain - you are forced to mentally re-ravel the tidy stack

of left-arrows into the underlying mess of combinators to begin to guess what went wrong.

Of course I enjoy that sort of thing. Or anyway I find it conveniently distracting sometimes.

I close with my 2nd or 3rd favorite quotation from Oscar Wilde:

Jack. Is that clever?

Algernon. It is perfectly phrased! and quite as true as any observation in civilised life should be.

Jack. I am sick to death of cleverness. Everybody is clever nowadays. You can’t go anywhere without meeting clever people. The thing has become an absolute public nuisance. I wish to goodness we had a few fools left.

-

A word that is frowned upon my employer as ostensibly meaningless. It's not. I use it here in the sense of the manifestation of verbosity, excessive use of many words were few - perhaps none - would have sufficed.] ↩

Comments

comments powered by Disqus