Were you at Clojure/conj in Washington last week? If so, hello again. Wasn't that a great conference? If not, head to Clojure TV, where all the talks are ready for streaming. Assuming some moderate level of Clojure obsession on your part, I couldn't recommend skipping any of them, so the full catch-up might take you a while, but there are two in particular that I strongly recommend.

Avoiding altercations

The first is actually the very last talk of the conference. Brian Goetz, whom you may have encountered previously as the author of Java Concurrency in Practice or heard of as the Java Language Architect at Oracle, spoke about Stewardship: The Sobering Parts. To talk about Java before an audience known to derive a certain amount of enjoyment from deriding the language takes mettle, nuance and wit, all of which he displayed in abundance. Of course, he wasn't trying to convert an audience of Lispers to worship at the altar of curly braces, but to convey some sense of the responsibility you have when 9 million programmers use your language and a good number of the world's production systems are running in it.

Clojure isn't (yet) in that position, but one language that was, at least in proportion to the total amount of code at the time, is COBOL. I'm not sure you can find anyone to defend it from an aesthetic or theoretical standpoint, but it was a very good fit for the computers of the day and for the purposes to which they were put.

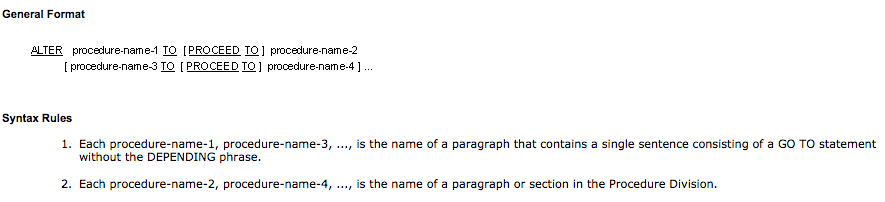

If you feel faint at the sight of GO TO statements, it might be time for a stiff drink, because we're going to talk about something even worse. That might be hard to imagine from the vantage point of our enlightened age, but it's true. Sometime in the 1980s, the ANSI standards committee for COBOL introduced the ALTER statement:

The thinking apparently was that if you have a good working memory and like puzzles, ordinary spaghetti code isn't going to be challenging enough, so you need self-modifying spaghetti code. What ALTER did was modify the destination of a specified GO TO statement, so that, from now on, it would go somewhere else.

Brian thinks that this was the precise moment when COBOL jumped the shark.1 It had had a pretty good run and a decent remaining cadre of developers, but the ALTER statement pretty much guaranteed that any codebase under active development would eventually become unmaintainable.

Which brought him to this immortal line:

Java's ALTER statement is only one bad decision away.

Although it's hard to type with meat-axes instead of hands, I'm going to attempt a minor refactoring:

${LANG}'s ALTER statement is only one bad decision away.

Always be Composing

Does this section title seem too sensible and eloquent for me to have thought of myself? It is! Actually, it's the title of another great talk, one by Zach Tellman, and the title isn't even the best part. There's this:

Composability isn't whether people can put two things together, but whether they are willing to.

I'm tempted to do that thing people do in blogs where they say something, and then say they're going to repeat it because it's so great, and then repeat it. But shucks, I've never had the panache to pull off something like that, and, anyway, I haven't explained yet what it means.

Composition, broadly speaking, is what emerges when you can combine simple pieces in different ways to produce complex and interesting results. Zach illustrated this with Sierpinski gaskets,2 exploring the tradeoffs as you model them as macros, code or data. Other things you can compose are Lego, phonemes and cold-cuts. For example, with Lego and a bit of discipline, you can make really complicated things.

Lego is much more popular than this other thing called Soma, which Soma programmers say is unfair, because they can make a dog too:

And, if they could get hold of enough pieces, plus some glue, an instruction manual in English, and a dependency management system, they could probably make great big, complicated dogs. Unfortunately, some people think of Soma as an academic block system and complain that making things with it is like solving a puzzle.

One of the most well known FP abstractions is of course the chaining of sequence operations,

(->> [1 2 3 4] (map inc) (filter even?) (mapcat #(range % (+ 5 %))) sort)

which clicks at such a deeply intuitive level that you can easily understand the same algorithm in languages you ostensibly don't know.

Which brings us to transducers. It would be extremely unfair to leave Zach open to charges of unsubtlety, so I'm going to quote the relevant section in full:

Transducers are a very specific sort of composition. They're not a higher order of abstraction. They're actually very narrowly targeted at a certain kind of operation we're trying to do. You can't compose a transducer with any function. And you can't even transduce with every kind of sequence operation. Certain operations such as sort, which require us to realize the sequence are not represented as transducers, so if we're looking at our code and we have some sort of great big chain of operations, one of which is one of these non-transducible things, we now need to separate that out, have certain things applied as a transducer and apply them, then apply these other operations separately. This is not to say that transducers are bad. I think they're a really interesting and very, very useful tool, but I do think it's interesting to look at how these are going to shape the tutorials for Clojure, because there's a nice sort of simplicity and immediacy to be able to say, map a function over my sequence. I think that it would be a little bit difficult for someone who's entirely new to Clojure to have their first operation over a sequence to be defined as a transducer.

Chronologically, the first quote comes after this one. There was some space in between them, but, for me, they resonated together.
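To make the point about sort concrete, here is a small sketch (mine, not from Zach's talk) of what happens to the earlier chain when it is rewritten with transducers, assuming Clojure 1.7 or later. The first three steps compose into one transducer, but sort has to be pulled out and applied separately. The names chained, xf and split are just for illustration.

```clojure
;; The original chain, using ordinary sequence operations throughout.
(def chained
  (->> [1 2 3 4]
       (map inc)
       (filter even?)
       (mapcat #(range % (+ 5 %)))
       sort))

;; The transducible steps, composed once with comp.
(def xf
  (comp (map inc)
        (filter even?)
        (mapcat #(range % (+ 5 %)))))

;; sort needs the whole collection, so it is applied outside the transducer.
(def split (sort (into [] xf [1 2 3 4])))

(println chained) ; (2 3 4 4 5 5 6 6 7 8)
(println split)   ; (2 3 4 4 5 5 6 6 7 8)
```

The two forms give the same answer; the difference is that the second one no longer reads as a single pipeline.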

History, Nomenclature and Ambition

Transducers are an ambitious concept with an interesting history. Well, it's history in the sense of 2 1/2 exciting years since the original reducers post, but we live in interesting times.

That post used transducers but called them by other names, including "reducer fn" and "reducing fn", while the usual binary function passed to reduce was (and, in reducers.clj, still is) referred to as a "combining function." In any case, transducers are not yet the main event.

The next installment arrived roughly a year later, with Transducers are Coming. I think the word "important" can legitimately be applied to that post, but perhaps not the word "clear." Among other things, it introduced the word "transducer" as a synonym for one of the few terms for them that hadn't actually been used, viz. "reducing function transformer." At some point, I attempted a glossary, which seems to have been consulted quite a few times without critical comments, so there's a possibility that might have been accurate. I use the past perfect, because today, at the very least, it is no longer complete, as it doesn't mention the latest innovation, educers.

Clarity and hospitality

It is true that I enjoy making fun of other people's prose, but, in my defense (1) I always introduce a solecism or two of my own, to keep credibility down to unintimidating levels and (2) it doesn't really matter what I say. As an Unimportant Person, I have the luxury of expressing myself in a manner that amuses me, and if the barrage of flippancy causes someone to stop reading, the world will continue to turn. When we're talking about core features of an important computer language, the stakes are higher. It is a problem that many experienced Clojure programmers are demonstrably confused by transducers; it would be a bigger problem if we didn't care; it would be truly tragic if it got to the point where newcomers to Clojure were welcomed -- as they are to certain other languages -- with the belittling advice to come back after training their brains.

The expressivity-ink ratio

The compositional power of a system is inversely proportional to the complexity of its description.

Kernighan and Ritchie in its first edition comprised a mere 228 pages, and they were actually all you needed to start coding. The successors of C may have had more expressive power, but that increased expressivity came at a huge cost in confusion and verbiage. Clojure, of course, cheats by being a lisp variant, but even among lisps it stands out for its crystalline internal coherence. It simply makes so much sense that the necessary textual documentation can be contained in mouseovers (or C-c C-d d or what have you). As with C, a small amount of information lets you start coding; better than C, the resulting code has a good chance of being correct.

Whatever you think of transducers, it's hard to argue that they don't require a lot of explanation. At the very least, they spawn tutorials at a pretty good clip.3 We're not quite at monadic levels, but the level of ambient perplexity represents a shift for Clojure. Perhaps some of the confusion will dissipate once the professional scribes pump out their next editions, but it seems possible to me that some is in the nature of the transductional beast. For example, there are quite a few functions involved in the transducer --

- The transducer is itself a function. (Not really a complaint.)
- It's a function of a function. (Not a complaint either. This is a functional language after all.)
- The function returns a function.
- map-like transducers are functions of functions returning a function of a function that returns a function.

-- of which the last is required to treat its result argument in a somewhat ritualistic fashion, passing it about in certain ways while never looking at it.
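For readers who want to see those layers spelled out, here is a minimal hand-written map-like transducer, assuming Clojure 1.7 or later. The name my-map is hypothetical; it is a sketch of the general shape, not the clojure.core source.

```clojure
(defn my-map
  [f]                              ; a function of a function...
  (fn [rf]                         ; ...returning a function of a (reducing) function...
    (fn                            ; ...that returns a function with three arities
      ([] (rf))                    ; init: delegate to the downstream function
      ([result] (rf result))       ; completion: pass result along without inspecting it
      ([result input]              ; step: transform the input, hand result straight through
       (rf result (f input))))))

(println (into [] (my-map inc) [1 2 3]))                                   ; [2 3 4]
(println (transduce (comp (my-map inc) (filter even?)) + 0 [1 2 3 4]))     ; 6
```

Note that result is threaded through every arity but never examined, which is the ritual referred to above.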

The sides of our intent

Transducers address two weaknesses of the standard approach to chaining operations over collections. First, they allow composition of sequence operations in a manner independent of the collection or delivery mechanism holding the sequence. Second, they are more efficient than the usual methods, because execution does not require reifying multiple (possibly lazy) collections to feed each other, and the transformations are in sufficient proximity that an optimizer might be able to do something with them.

There are also at least two features that could be seen as advantageous but may not be as fundamental.

First, the composition can be accomplished, literally, with comp, because transducers are functions of arity 1. Second, transducers are optimally suited for use in reduction operations, since what that function does is transform a reducing (or combining) function.
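As a small illustration of both the container independence and the literal use of comp, the sketch below builds one transducer and hands it to three different consumers. It assumes Clojure 1.7 or later; the name xf is arbitrary.

```clojure
;; One transformation, composed with plain comp.
;; With transducers, data flows through the steps left to right.
(def xf (comp (map inc) (filter even?)))

(println (into [] xf [1 2 3 4]))        ; build a vector:   [2 4]
(println (sequence xf [1 2 3 4]))       ; build a sequence: (2 4)
(println (transduce xf + 0 [1 2 3 4]))  ; reduce directly:  6
```

The same xf can also be attached to a core.async channel when it is created, which is the "delivery mechanism" case, though that needs the core.async dependency and is left out here.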

Is it important to do all these things at the same time? Is there, at some mathematical level, profundity in that we could do so? Perhaps, to both questions. But I don't think the answer is obvious.

Modest proposal

Actually two proposals, the more specific of which is indeed modest, offered only as an example of the sort of thing one might think about. The broader proposal, that we should take a step back and think about things, takes a bit of gall. To make up for that, I promise not to talk about type systems.

Rewind a couple years, and ask yourself what, to a functional programmer, is the most obvious way to transform a sequence of values into another sequence, containing zero or more elements for each original value. Wait, I know. It's this:

(defn one-to-many [x]
  (if (something x) [(foo x) (bar x) "whiffle"] []))

(mapcat one-to-many my-seq)

;; composition
(->> my-seq
     (mapcat one-to-many)
     (mapcat another-to-many))

The pattern is pretty well enshrined; it is not controversial. Now, suppose you had the additional requirement that the transformations might potentially depend on previous values. One obvious possibility is a function that takes a state as well as an input and returns both the output values and the new state, e.g. [[out1 out2 ...] new-state]:

(defn one-to-many-with-state [x s]
  (if (something x s)
    [[(foo x)] (inc s)]
    [[(bar x) (bar x)] (dec s)]))
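Since something, foo and bar are placeholders, here is a concrete function of the same shape that can actually be run. The name running-total is made up for illustration; it emits the sum so far and carries that sum forward as its state, treating a nil state as zero.

```clojure
(defn running-total
  "Takes an input and a state; returns [[outputs ...] new-state]."
  [x total]
  (let [total (+ (or total 0) x)]
    [[total] total]))

;; Thread the state through two calls by hand.
(let [[out1 s1] (running-total 5 nil)
      [out2 s2] (running-total 3 s1)]
  (println out1 out2 s2)) ; [5] [8] 8
```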

So far, this is all in the category of the obvious, and, happily, everything that follows is in the category of internal implementation details that need not be obvious.

mapcatreduce

At this point, we're going to need something other than mapcat to apply our function. This something is going to be a hybrid of mapcat and reduce, where the former gets applied iteratively to each input, while the latter accumulates state. This will do for now:

(defn translate-seqable [tl state inputs]
  (let [[input & inputs] inputs
        [outputs state] (tl input state)]
    (if inputs
      (concat outputs (lazy-seq (translate-seqable tl state inputs)))
      (concat outputs (first (tl nil state))))))

The last line introduces a small twist: we're going to invoke tl one last time with a nil value, so it can clear out any residual state.
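To see why that final nil call matters, consider a translator that groups inputs into pairs. The sketch below repeats the translate-seqable definition so it runs on its own; tpairs is a hypothetical translator, not part of the post's code, and it assumes inputs are never nil.

```clojure
;; Same definition as in the text, repeated so this block is self-contained.
(defn translate-seqable [tl state inputs]
  (let [[input & inputs] inputs
        [outputs state] (tl input state)]
    (if inputs
      (concat outputs (lazy-seq (translate-seqable tl state inputs)))
      (concat outputs (first (tl nil state))))))

;; Hypothetical translator: the state is the not-yet-paired value, or nil if none.
(defn tpairs [v pending]
  (cond
    (nil? v)       [(if (nil? pending) [] [[pending]]) nil] ; finishing: flush any leftover
    (nil? pending) [[] v]                                   ; hold v until its partner arrives
    :else          [[[pending v]] nil]))                    ; emit a completed pair

(println (translate-seqable tpairs nil [1 2 3 4 5])) ; ([1 2] [3 4] [5])
(println (translate-seqable tpairs nil [1 2 3 4]))   ; ([1 2] [3 4])
```

Without the finishing call, the trailing 5 in the first example would be silently dropped.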

We can write a similar mapcat/reduce to translate the contents of an async channel:

(defn- translate-channel [tl s0 c-in & [buf-or-n]]
  (let [c-out (chan buf-or-n)]
    (async/go-loop [state s0]
      (let [v (<! c-in)]
        (if-not (nil? v)
          (let [[vs state] (tl v state)]
            ;; wait for the copy to finish, so outputs stay in order
            (<! (async/onto-chan c-out vs false))
            (recur state))
          (let [[vs _] (tl nil state)]
            (async/onto-chan c-out vs true)))))
    c-out))

Both of these could probably be written more efficiently, perhaps directly in Java, but let's not bother with that yet.

Example translators

The finishing logic necessitates checking for a nil input, which in most cases just means wrapping the body in an (if-not (nil? v) ...).

The standard mapping and predicate filtering ignore state,

(defn tmap [f]
  (fn [v _] (if-not (nil? v) [[(f v)] nil])))

(defn tfilter [p]
  (fn [v _]
    (if-not (nil? v)
      [(if (p v) [v] []) nil])))

while the canonical deduplicator uses it to hold a scalar:

(defn dedup [v state]
  (if-not (nil? v)
    [(if (not= v state) [v] []) v]))

Duplication does not use state, but does make use of the ability to return multiple values:

(defn dup [v _]
  (if-not (nil? v) [[v v] nil]))

For fun, we can implement a sorting translator that just swallows input until finishing time, when it releases the sorted input in one go:

(defn tsort [v s]
  (if-not (nil? v) [[] (cons v s)] [(sort s) nil]))

(To be fair, one can do this with transducers too.)
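For comparison, here is a minimal sketch of what such a sorting transducer might look like, assuming Clojure 1.7 or later. The name xsort is hypothetical. It buffers every input in a volatile and only releases them, sorted, from the completion arity.

```clojure
(defn xsort []
  (fn [rf]
    (let [buf (volatile! [])]            ; mutable buffer, private to this transducer instance
      (fn
        ([] (rf))
        ([result]
         (let [sorted (sort @buf)]
           (vreset! buf [])
           ;; feed the sorted values downstream, then let downstream complete;
           ;; reduce stops early and unwraps if rf returns a reduced value
           (rf (reduce rf result sorted))))
        ([result input]
         (vswap! buf conj input)         ; swallow the input, emit nothing yet
         result)))))

(println (into [] (comp (map inc) (xsort)) [3 1 2])) ; [2 3 4]
```

It works, but notice that it needs both local mutation and careful handling of result, which are the two things the translator version avoids.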

composition

Suppose, next, that we would like to be able to compose multiple one-to-many-with-state-like functions into a single function to pass to one of these translates. This single function will consume a state that is actually a vector of the states of the functions that compose it. The only comments I'll make about the following proof-of-concept implementation are that (1) it can undoubtedly be done more efficiently, (2) its complexity is not something the user needs to think about:

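(defn tcomp
  "Compose stateful translators; state in the returned translator is a vector of composed states."
  [& tls]
  (fn [input states]
    (let [finishing? (nil? input)]                  ;; a nil input means "flush"
      (loop [[tl & tls] tls                         ;; loop over translators
             [s & ss]   states                      ;; and states
             inputs     (if finishing? [] [input])  ;; accrue flattened inputs
             s-acc      []]                         ;; new states
        (if-not tl
          [inputs s-acc]                            ;; done
          (let [step        (fn [[acc s] v]         ;; feed one value to tl, threading its state
                              (let [[vs s] (tl v s)]
                                [(into acc vs) s]))
                ;; when finishing, each stage sees upstream's flushed values, then nil
                [outputs s] (reduce step [[] s]
                                    (if finishing? (concat inputs [nil]) inputs))]
            (recur tls ss outputs (conj s-acc s))))))))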

protocols

Finally, we wrap our translate-whatevers in a protocol, so we can just call translate, irrespective of the container:

(defprotocol ITranslatable
  (translate* [this s0 tl]))

(extend-protocol ITranslatable
  clojure.lang.Seqable
  (translate* [this s0 tl] (translate-seqable tl s0 this))
  clojure.core.async.impl.protocols.Channel
  (translate* [this s0 tl] (translate-channel tl s0 this)))

(defn translate [translator input] (translate* input nil translator))

rah

For completeness,

(def blort (tcomp (tmap inc) (tfilter even?) dedup (tmap inc) tsort))

(translate blort [3 1 1 2 5 1])
;; (3 3 5 7)

(def c (chan))
(def ct (translate blort c))
(async/onto-chan c [3 1 1 2 5 1])
(<!! (async/into [] ct))
;; [3 3 5 7]

As bonuses, we never have to deal with mutability, and we don't have to take any special care to do exactly the right thing with those result values we're not supposed to look at.

Question and Answer

Do you hate transducers?

No, I think they're cool.

So, what are you complaining about?

Maybe they're too complicated. Yes, I can wrap my head around that complexity, but that's the sort of thing I do for fun. Most aspects of Clojure not only do not require a love of complexity but appeal to people who loathe it. Transducers stand out as an exception.

What's so complicated?

I answered this above, but, to summarize, the reduce heritage seems to stick out more than necessary.

Didn't Christophe Grand already make these points?

He made some of them. As I recall (his site is down at the moment), he too objected to the visibility of reduce semantics in contexts that were really about transformation. However, he reached somewhat different conclusions from mine, in the end proposing that every transducer-like thing be intrinsically stateful and mutative, in the interests of simplicity and efficiency. The arguments for doing this could be applied nearly anywhere we use a functional style, which implies strong arguments against it that are familiar enough not to require rehearsal here.

Your solution is inefficient.

True, but it's not supposed to be a solution. It's somewhere between a rumination and a proof of concept. Something to think about. If we were to go in a similar direction, there are many possible optimizations. For example, I don't see anything wrong with violating functional purity within core language facilities; rather, what bothers me is when users are regularly required to do so.
