TL;DR

- As noted earlier, transducers can be properly annotated in Clojure using

core.typedand they probably should be. - But... there are a few tricks necessary to make it work.

- Transducers in Scala require tricks too, but different ones.

- Oh, but they're so lovely in Haskell.

Update 2015-01-12

Were you led here by Clojure Gazette? Eric Normand is usually more discriminating, but don't worry, this will only waste a little of your time. Per the previous batch of updates, just below, and various subsequent posts on more or less the same topic, it should be clear this wee bagatelle is not meant to be authoritative. In particular, nobody should try the approach I use with Scala here; the more obvious and better one is at the very beginning of the fourth post in the series; a somewhat zanier one occupies the remainder of that post.

This series of transducer posts has helped me clarify some thoughts on referential transparency, bug detection, type systems and language evolution. If you are morbidly curious about all the possible ways a person can be wrong on these topics, you might enjoy reading them:

- A glossary of transducerish terms.

- (You are here.)

- Initial fretting about stateful transducers.

- State+type for transducers (in Scala).

- Rue and despond.

Updates 10-29 20:00 (thanks, Twittersphere)

-

I am apparently confused about the difference between universal and existential types. Happily, I don't seem to be alone in this, but I promise to figure it out anyway...

-

It would probably be more natural (and certainly more concise) to stick to the trait/apply solution in Scala than to try to emulate a Haskell style, interesting though the attempt may have been. Under the hood, the complicated functions are still classes with an apply method anyway.

-

My Haskell type doesn't acknowledge that transducers might have state. The Scala and Clojure versions don't either, but that's more acceptable in their cultures.

Transducers

I won't explain transducers here. The canonical introduction is Rich Hickey's blog post, with further explanation in his Strangeloop talk. I contributed a brief glossary, which may possibly be helpful.

Why bother with typed transducers in Clojure

At the end of an earlier post, I noted that, despite

some controversy

on the subject, transducer's type can be defined with core.typed (I'll walk through this a bit further down,

so don't panic...)

(t/defalias ReducingFn

(t/TFn [[a :variance :contravariant]

[r :variance :invariant]]

[r a -> r]))

(t/defalias Transducer (t/TFn [[a :variance :covariant]

[b :variance :contravariant]]

(t/All [r] [(ReducingFn a r) -> (ReducingFn b r)])))

in a manner fairly evocative of the way you'd do it in Haskell:

type ReducingFn a r = r -> a -> r

type Transducer a b = forall r . ReducingFn a r -> ReducingFn b r

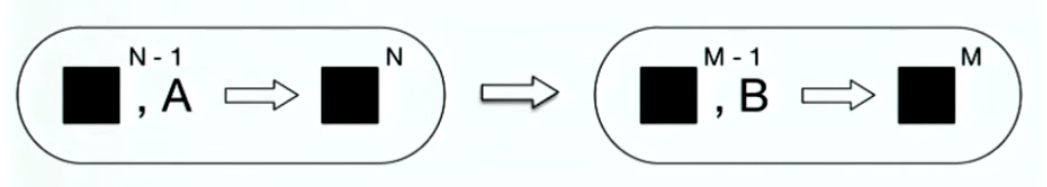

While these representations may be more explanatory (to some, anyway) than the graphical illustration

in Rich's talk, explanation is not the main point. Neither is the triumphal riposte that transducers are yet another thing that isn't a good example of the superiority of dynamic typing.1

With or without types, you're going to figure out transducers eventually, and I doubt you're going to understand them by types alone. It might even be better to go untyped, since a good flailing of trial and error can have educational value.

That's is a less attractive option when writing code that's meant to do something real,

and that's where a type system can be helpful. If you use transducers - and you will, because they're

incredibly powerful - you will at some point be confounded by mysterious bugs of your own creation. You will

get confused by the funny reversed order of composition. And then you will stare, despairingly,

at long stack traces containing multiple anonymous functions. Then you'll festoon your code with more

and more printlns (or, if you're fancy, logging macros) until the head-slap moment occurs.

Slapless

I'll get into the details of the above annotations in a bit, but for now just take them as given. Accept

also that, for some reason, there's a special composition function compt just for transducers.

Our artificial goal is going to be to take a sequence of strings representing integers, like

["1" "2" "3"], parse them, multiply them by something and then, for each integer calculate

$\sqrt[n]{2}$, and finally add those roots up. Here are my three transducers (ignoring, for simplicity,

the zero- and one- argument alternatives for the returned function):

(t/ann t-parsei (Transducer t/Int t/Str))

(defn t-parsei [rf]

(fn [result input]

(rf result (Integer/parseInt input))))

(t/ann t-repn (Transducer Number Number))

(defn t-repn [rf]

(fn [result input]

(rf (rf result input) input)))

(t/ann t-root (Transducer Double Number))

(defn t-root [rf]

(fn [acc in]

(rf acc (pow 2.0 (/ 1.0 (double in))))))

Taking the Transducer type function as given, these annotations make sense. The

first transducer transforms a function that reduces over integers to one that reduces over

strings; the last transforms a function that reduces over doubles to one that reduces over

integers; and the one in the middle doesn't change the type at all.

If all goes well, I should be able to compose the transducers, apply them to the

+ reducing function and reduce,

(reduce ((compt t-root t-repn t-parsei) +) 0 ["1" "2" "3"])

but this doesn't get past type-checking:

Domains:[x -> y] [b ... b -> x]

Arguments:

[[t/Any Number -> t/Any] -> [t/Any Number -> t/Any]] [[t/Any t/Int -> t/Any] -> [t/Any t/Str -> t/Any]]

Squinting at the last line slightly,

[ Number ] -> [ Number ]] [[ t/Int ] -> [ t/Str ]]

we see the problem: the transducers are reversed. That's an easy mistake to make, with all those functions of functions strewn about, but it's also easy to fix, once we have a timely and specific error. (I won't pretend that it's a particularly elegant error, but, once you get used to reading it, it's a hell of a lot more timely and specific than an exception and stack trace at runtime.)

Back on the straight and narrow, we get the result we wanted:

user> (t/cf (compt t-parsei t-repn t-root))

(t/All [r] [[r Double -> r] -> [r String -> r]])

user> (reduce ((compt t-root t-repn t-parsei) +) 0 ["1" "2" "3"])

9.348269224535935

Type functions definitions

So, ReducingFn and Transducer seem pretty useful. How did we make them?

(t/defalias ReducingFn

(t/TFn [[a :variance :contravariant]

[r :variance :invariant]]

[r a -> r]))

The TFn indicates that we're making a type function, i.e. a function of types that returns another type. The two types it takes are a (the type we are

reducing over) and r (the type we're reducing to). Since we ought to be able to substitute a function that knows how to consume Numbers in general

for a function that

will encounter only Ints, the ReducingFn is contravariant in a, by the Liskov substitution principle. On the other hand, the exact opposite is true for

the value returned by a function: if the recipient wants Int, it's not going to be happy with any old Number, but it could handle a Short or some other

subtype. As r appears both as argument (suggesting contravariance) and return type (suggesting variance), it has to be invariant.

The Transducer type function returns the type of a function that consumes one ReducingFn and returns another.

(t/defalias Transducer (t/TFn [[a :variance :covariant]

[b :variance :contravariant]]

(t/All [r] [(ReducingFn a r) -> (ReducingFn b r)])))

If someone is expecting a Transducer that

consumes a particular kind of ReducingFn, they should be happy with a Transducer that consumes a supertype of that ReducingFn, i.e. Transducer is

contravariant in the type ReducingFn used as its argument.

But, since ReducingFns are themselves contravariant in the type they reduce over, the Transducer must be covariant in a.

By contrast, the Transducer is covariant in the type of ReducingFn it returns, but since the ReducingFn is contravariant in the

type it consumes, the Transducer must be contravariant in b.

Phew. It might come as a relief that the Transducer doesn't give a damn about the type r being reduced to. To advertise our apathy,

while at the same time promising that we won't mess with r, we need the All keyword, indicating a so-called existential type.

(t/defalias Transducer (t/TFn [[a :variance :covariant]

[b :variance :contravariant]]

(t/All [r] [(ReducingFn a r) -> (ReducingFn b r)])))

Tricks and compromises with typed Clojure

You may have wondered why we had to define a special t-repn for

repeating numbers? We could in fact have created a more general

version

(t/ann ^:no-check t-rep (t/All [a] (Transducer a a)))

with (since Clojure is still dynamically typed underneath our

annotations) exactly the same definition. However, when

we actually use t-rep, we need to inform typed Clojure exactly which

existential variant we really want, by instantiating it:

(t/cf (compt t-parsei (t/inst t-rep t/Int) t-root))

This is because typed Clojure only performs local type inference. You can read more about the limitation in this post and in the references it contains, but the gist is that nothing is ever inferred by working backwards from the return type of a function, so you need to provide a crutch. Most languages with some kind of automatic type inference perform the local variety; a few, like OCaml and Haskell, do a much fuller job; and of course the vast majority of languages do none whatsoever.

The other oddity is one I mentioned earlier: we're not using Clojure's

normal comp. Why? Well, consider the type of a simple composition

function:

(All [a b c] [[b -> c] [a -> b] -> [a -> c]])

That makes sense. The first function to be applied converts from a to b, and then

the second converts the b to a c. Now, let's compose 3 and 4 functions:

(All [a b c d] [[c -> d] [b -> c] [a -> b] -> [a -> d]])

(All [a b c d e] [[d -> e] [c -> d] [b -> c] [a -> b] -> [a -> d]])

The pattern is pretty clear, but there isn't an obvious annotation that would capture the type

of all variadic possibilities. Instead, core.typed suggests

(All [x y b ...] [[x -> y] [b ... b -> x] -> [b ... b -> y]])

which means the 2nd and succeeding functions all have the same signature. Even this limited composition

type challenges core.typed if the functions are even slightly polymorphic. E.g.

(t/cf (comp identity identity))

will fail with an error, roughly like:

user> (t/cf (comp identity identity)

Type Error polymorphic function comp could not be applied to arguments:

Polymorphic Variables: a b c

Domains: [b -> c] [a -> b]

Arguments: (t/All [x] [x -> x]) (t/All [x] [x -> x])

As noted above, we are allowed to instantiate a specific version of the polymorphic type, so

user> (t/cf (t/inst identity Long))

[Long -> Long]

user> (t/cf (comp (t/inst identity Long) (t/inst identity Long)))

[Long -> Long]

In summary:

(comp identity identity)fails, because identity is polymorphic(comp (t/inst identity Long) (t/inst identity Long))succeeds, because we have instantiated a specific type.(comp (t/inst identity Long) (t/inst identity Long) (t/inst identity Long))fails again, because comp is called with three arguments.

Haskell's type inference is of course more sophisticated, but it also makes the problem easier by eschewing variadics in favor of currying. There's one composition function, which takes one argument and happens to return another function:

(.) :: (b -> c) -> (a -> b) -> a -> c

There are thus at least two reasons why Haskell can easily deduce:

id :: a -> a

(id . id . id) :: c -> c

First, it does non-local type inference; second, it doesn't have to deal with variadic functions.

A slightly better variadic comp

We can't do much about local type inference, but we can write a comp that lets core.typed check

an arbitrary series of composed transformations. The trick, as usual when we need to go easy on the

type checker, is to use a macro to simplify what it needs to check:

(defmacro comp* [& [f1 f2 & fs]]

(if-not fs

`(comp ~f1 ~f2)

`(comp ~f1 (comp* ~f2 ~@fs))))

so (comp* c->d b->c a->b) unwinds to (comp c->d (comp b->c a->b)), and failure #3

now succeeds:

user> (t/cf (comp* (t/inst identity Long) (t/inst identity Long) (t/inst identity Long)))

[Long -> Long]

Now, the general transducer (Transducer a b) is of course polymorphic, but even a specific-

seeming one like t-repn (which is (Transducer Long Long)), still has that

(All [r] ...), polymorphism in the type being reduced to.

Thus, (comp t-repn t-repn) will fail with the now familiar "could not be applied to arguments" error.

Fortunately, we know that the transducer doesn't care at all about r, so, without loss of

actual generality, we can lie:

user> (t/cf (comp (t/inst t-repn Any) (t/inst t-repn Any)))

[[Any Number -> Any] -> [Any Number -> Any]]

Having lied, we can make it right again by casting the polymorphism back in:

(t/ann ^:no-check lie-again

(t/All [a b] [[[t/Any a -> t/Any] -> [t/Any b -> t/Any]] ->

(t/All [r] [[r a -> r] -> [r b -> r]])]))

(def lie-again identity)

so that:

user> (t/cf (lie-again(comp (t/inst t-repn t/Any) (t/inst t-repn t/Any))))

(t/All [r] [[r Number -> r] -> [r Number -> r]])

Now we combine the two lies and the de-variadification into a single macro

(defmacro compt [& tds]

(let [its (map #(list 't/inst % 't/Any) tds)]

`(lie-again (comp* ~@its))))

and, as demonstrated way above, we can compose transducers. Now you know why we need compt.

It's far prettier in Haskell

There's not too much to say about this. While the Transducer type definition

type ReducingFn a r = r -> a -> r

type Transducer a b = forall r . ReducingFn a r -> ReducingFn b r

is essentially the same as in Clojure, everything else is easier. We can write fully general transducers

t_dub :: Num a => Transducer a a

t_dub f r b = f r (2 * b)

t_rep :: Transducer a a

t_rep f r b = f (f r b) b

t_parse :: Read a => Transducer a String

t_parse f r s = f r $ read s

t_root :: Transducer Double Integer

t_root f r i = f r $ pow 2.0 (1.0/(fromInteger i))

and compose them with no special effort.

(t_parse . t_rep . t_dub . t_root) :: ReducingFn Double r -> ReducingFn String r

(foldl ((t_parse . t_rep . t_dub . t_root) (+)) 0.0 ["1","2","3"]) :: Double

Scala is not Haskell either

Let's start out unambitiously. Trying to compose the identity function in Scala

seems to run into the same problem as in Clojure

scala> identity _ compose identity

<console>: error: type mismatch;

found : Nothing => Nothing

required: A => Nothing

identity _ compose identity

^

but what's going on here is a slightly different problem. While identity is defined

polymorphically as identity[A](a:A):A, by the time we see it in the REPL, all type

information has been erased. (We deliberately erased it, by instantiating the function with

_ in a context where no other type information is available.)

If we put it back explicitly, composition works, and the composed function can itself be used polymorphically:

scala> def ia[A] = identity[A] _ compose identity[A]

ia: [A]=> A => A

scala> ia(3)

res39: Int = 3

scala> ia(3.0)

res40: Double = 3.0

We can chain compositions in a manner that looks a bit like Haskell

scala> identity[Int] _ compose identity[Int] compose identity[Int]

res33: Int => Int = <function1>

but is really quite different. Scala's compose is a method of the Function1 class rather than

a standalone function, as this less sugary rendition makes clear:

scala> (identity[Int] _).compose(identity[Int] _).compose(identity[Int] _)

res36: Int => Int = <function1>

That's OK. Scala's OO nature gives us a set of tools completely different from those we got from Clojure's homoiconicity, but they can be deployed for qualitatively similar purposes - in this case, safe and reasonably attractive transducers.

In fact,

I've seen transducers in Scala implemented as a trait, which then delegates to a virtual transform method, e.g.

type ReducingFn[A, R] = (R,A) => R

trait TransducerT[A, B] {

def transform[R]: ReducingFn[A, R] => ReducingFn[B, R]

...

}

To make TransducerT act more like a function, we would add an apply method, and to

make chained composition pretty, a compose method:

def apply[R] = transform _

def compose[C](t2: Transducer[C, A]): Transducer[C, B] = {

val t1 = this

new Transducer[C, B] {

override def transform[R]: (ReducingFn[C, R]) => ReducingFn[B, R] = rf => t1(t2(rf))

}

}

This will work, but we it's more amusing to try to define transducers as existential types,

using the semi-mystical forSome annotation, which Scala uses for the same purpose as

Haskell's forall and typed Clojure's All:

type ReducingFn[-A, R] = (R,A) => R

type Transducer3[+A,-B,R] = ReducingFn[A,R] => ReducingFn[B,R]

type Transducer[+A,-B] = Transducer3[A,B,R forSome {type R}]

(To be honest, I don't know if it's possible to do this without the intermediate ternary type.)

To assist in creating simple transducers that just modify individual elements of cargo, we

write mapping, again with an intermediate ternary type,

def mapping3[A,B,R](f : A => B) : Transducer3[B,A,R] = { rf : ReducingFn[B,R] =>

(r : R ,a:A) => rf(r,f(a))}

def mapping[A,B] = map3[A,B,R forSome {type R}] _

which we use like this:

val t_parsei: Transducer[Int, String] = mapping { s: String => s.toInt}

def t_root2 : Transducer[Double,Int] = mapping { i : Int => Math.pow(2.0,1.0/i)}

Nice, so far, but let's try reducing something easy:

scala> println(List("1","2","3").foldLeft[Int](0)(t_parsei (_+_)))

<console>:12: error: type mismatch;

found : Int

required: String

Huh? Maybe it's having trouble understanding _+_:

scala> println(List("1","2","3").foldLeft[Int](0)(t_parsei {(i:Int,j:Int)=>i+j}))

<console>:12: error: type mismatch;

found : (Int, Int) => Int

required: TransducerExistential.ReducingFn[Int,R forSome { type R }]

(which expands to) (R forSome { type R }, Int) => R forSome { type R }

Different but not better. Maybe it will work to cast explicitly to the ternary type:

scala> println(List("1","2","3").foldLeft[Int](0)(t_parsei.asInstanceOf[Transducer3[Int,String,Int]] (_+_)))

6

But that's a little ugly, and whenever something is even slightly ugly in Scala, you introduce an implicit to

make it confusing instead. Hence

implicit class TransducerOps[A,B](t1 : Transducer[A,B]) {

def transform[R](rf : ReducingFn[A,R]) = t1.asInstanceOf[Transducer3[A,B,R]](rf)

}

to coerce automatically after hoisting the Transducer into

the TransducerOp container class.

Since we've already crossed the Rubicon, let's bring some slick Unicode along for the ride:

def ⟐[R] = transform[R] _

Now

scala> println(List("1","2","3").foldLeft[Int](0)(t_parsei ⟐ (_+_)))

6

Finally, we're going to want chained function composition, so let's put a method for that, plus a nifty symbol, into the implicit class

def compose[C](t2 : Transducer[C,A]) : Transducer[C,B] = comp(t1,t2)

def ∘[C](t2: Transducer[C, A]): Transducer[C, B] = compose(t2)

so that:

scala> println(List("1", "2", "3").foldLeft[Double](0.0)((t_parsei ∘ t_repeat ∘ t_root2) ⟐ {(x:Double,y:Double) => x+y}))

9.348269224535935

I suspect that there will be more trickery further down the road, as we flesh out the standard

library of transducer functions. To get sequence to work, I ended up performing multiple

coercions:

def sequence[A, B](t: Transducer[B, A], data: Seq[A]): Seq[B] = {

val rf1: ReducingFn[B, Seq[B]] = { (r, b) => r :+ b}

val rf2: ReducingFn[A, Seq[B]] = t(rf1.asInstanceOf[ReducingFn[B, R forSome {type R}]]).asInstanceOf[ReducingFn[A, Seq[B]]]

data.foldLeft[Seq[B]](data.companion.empty.asInstanceOf[Seq[B]])(rf2)

}

scala> println(sequence(t_parsei ∘ t_repeat ∘ t_root2, List("1", "2", "3")));

List(2.0, 2.0, 1.4142135623730951, 1.4142135623730951, 1.2599210498948732, 1.2599210498948732)

Conclusions

The type of one transducer is not obscure, and it's not much harder to understand than a callback. However, once you combine several transducers into a working program, the business of reconciling and checking their types can be challenging. Of the languages I know, only Haskell handles it gracefully. Building an entire system in Haskell might be intimidating, but, the transducer bits will be - bracing ourselves for a word not normally applied to Haskell - easy.

Transducers were invented for and clearly work in unityped Clojure,

but I find myself wondering if they'll be one function abstraction too

far for projects large enough to require many developers, and the

argument that I find them beautiful might not carry the day. I do

believe that a capable type framework would at least reduce the

frequency of bugs, but typed Clojure is not at the point where

telling someone to use it for transducers will obviously improve her or his life.

It does not seem to be the case that a little macro cleverness can

nudge the problem into

the core.typed sweet spot.

It was interesting to play with transducers in Scala, if only because not

many people have. Given the industrial efforts that have gone into Scala and the

centrality of type checking to the language, it's hardly surprising that it does a

better job than typed Clojure. But the margin of victory is slimmer than I would

have expected. Even with the latest release of the IntelliJ plugin, many type errors

didn't show up until a complete compilation. In general, once you get to

forSome and its ilk, there isn't a wealth of straightforward advice available.

(Hie thee, of course, to the Twitter-curated

tutorials, which are about as good as it gets.)

-

I'm pretty sure I heard someone say this. ↩

Comments

comments powered by Disqus