How strange to think that, a mere week ago, the world had not yet heard my public pronouncement that transducers ought to be stateless. True, the media frenzy has died down a bit, but in its stead comes the quiet awareness that life will never be the same. Anyway, that's the way I like to think about it.

TL;DR

- Storing state in the transducer makes it mutable, which might be unfortunate on general principles. In any event, it interferes with the metaphor of transducers as recipes for transformation and arguably makes code more difficult to understand.

- A natural place to store state, such as the previous value, is in the accumulating reduction. After all, the reduction is already changing.

- So we make the reduction value a wrapper around the original reduction of interest

(e.g. a sum) and the internal state being maintained by the transducer, e.g.

RH[Double,Option[S]]. - Since state-aware transducers can be composed, there may be multiple layers of nesting, with different types of state at each level, so

- the the type of the reduction value is also nested, e.g.

RH[RH[RH[R,Option[S1]],Option[S2]],Option[S3]]. - The type of the transducer must encode not the type of the reduction value, but the way it will modify the type of the reduction.

- The composition of multiple modification encodings seems like it might be nontrivial.

- BUT: Using parameterized type projections in Scala, we can write what are effectively functional programs to compute the new type of the reduction given the type of the transducer and to compute the new type of a transducer composed from others.

- The gussying up process will involve type constraints (nice!), implicitous fiddling (uh huh ...) and manifests (hmmf).

We will use funny symbols!

=:= ⟐ # ∘

(Only half of them gratuitously.)

Language Warnings

- Other than the traditional association of transducers with Clojure and the plea implicit in

this post for help in doing what I do here in

core.typed, there is no further Clojure to be found here. - Several posts ago, I kind of suggested

using

existentialuniversal types for transducers in Scala. Doing so would actually be a mistake. I'll instead be generalizing from one of the much improved implementations suggested to me by thoughtful readers.

Overture

But really, they shouldn't have state.

The bulk of this post is going to be about the combined ramifications of state-aware but stateless transducers and static typing, but I wanted to back a bit and spend a moment reinforcing the argument against stateful transducers.

In his Strangeloop talk, Rich Hickey used the metaphor of luggage handling. A transducer is akin to instructions to unbundle pallets containing multiple bags (mapcatting), or remove bags that smell like food (filtering), or wrap up ones that look like they'll break (mapping). Each instruction should be independent of whether the cargo arrives on a conveyor belt or the back of a tractor, and, furthermore, the combination of instructions should also be deliverable independent of transport.

In this light, he notes, it seems strange that we're ok with map, filter and mapcat/flatMap

methods specific to core.async channels or streams or lists. It's especially

bizarre that we tolerate transport-specific composition of such operations. I.e.,

in Scala-esque, we could have textually similar lines like

theList.map(wrapWithTape).filter(isSmelly)

theChan.map(wrapWithTape).filter(isSmelly)

where you cannot possibly factor out the stuff after the first . because it

embeds container methods.

With lovely transducers, it would be more like this:

val td = mapping(wrapWithTape) compose filtering(isSmelly)

theList.sequence(td)

theChan.sequence(td)

where td and the things that compose it are just reducing functions

(R,A)=>A. So far, so good.

Here are two more luggage examples:

- If you find consecutive bags belonging to the same person, wrap them up together.

- If you find a bag that's ticking, stop the entire operation.

Both of these involve state of different sorts - keeping track, respectively, of the previous bag's owner or

of whether a tick has ever been heard - but, when we transition back to computer-land,

the suggested location of that state differs. The halt order is conveyed by wrapping the

reduction value in a Reduced object, while bundling state is kept in an atom

inside the transducer itself.

Back to our story.

Last week I discussed applying Reduced approach to state of all kinds and

illustrated how it might be done in Clojure - conventional, untyped Clojure.

This week, it's Scala and static typing. Things get interesting.

Baseline transducer

Here. This is stolen outright from Paul Phillips and then slightly mangled:

type RFn[-A, R] = (R, A) => R

trait Transducer[+A, -B] {

def apply[R](f: RFn[A, R]): RFn[B, R]

def compose[C](t2 : Transducer[C, A]): Transducer[C, B] = {

val t1 = this

new Transducer[C, B] { def apply[R](rf: RFn[C, R]) = t1(t2(rf)) }}

def ∘[C] = compose[C] _

def ⟐[R] = apply[R] _

}

def map[A, B](f: A => B): Transducer[B, A] =

new Transducer[B, A] { def apply[R](rf: RFn[B, R]) = (r, a) => rf(r, f(a)) }

def sequence[A, B](t: Transducer[B, A], data: Seq[A]) = data.foldLeft(Seq[B]())(t(_ :+ _))

This clean, straightforward and uses rather than abuses type inference. I'm having trouble reconstructing the reasons I originally objected to this approach.

We can perform the usual tricks:

val t_parsei: Transducer[Int, String] = map(_.toInt)

val t_root2 : Transducer[Double, Int] = map(i => Math.pow(2.0, 1.0 / i))

def t_repeat[A, R] = new Transducer[A, A] { def apply[R](rf: RFn[A, R]) = (r, a) => rf(rf(r, a), a) }

println(sequence(t_parsei ∘ t_repeat ∘ t_root2, List("1", "2", "3")))

println(List("1", "2", "3").foldLeft(0.0d)(t_parsei ∘ t_repeat ∘ t_root2 ⟐ (_ + _)))

Now let's introduce state. Recalling the canonical Clojure example of a deduplicator,

(defn t-dedup []

(fn [xf]

(let [prev (volatile! ::none)]

(fn [result input]

(let [prior @prev]

(vreset! prev input)

(if (= prior input)

result

(xf result input)))))))

which store state in the volatile prev variable, let's write a Scala version in the obvious way,

def t_dedup[A] = new Transducer[A,A] {

var aPrev : Option[A] = None

def apply[R](rf:RFn[A,R]) : RFn[A,R] = {(r:R,a:A) => aPrev match {

case Some(a0) if a==a0 => r

case _ => {

aPrev = Some(a)

rf(r,a)

}}}}

by storing the previous value in the aPrev option. That looks OK, but there's trouble abrewing:

scala> val t = t_parsei ∘ t_dedup[Int]

scala> println(sequence(t,List("1","2","2","3")))

List(1, 2, 3)

println(sequence(t,List("3","2","1")))

List(2,1))

Eek. The transducer thought the first "3" in the second sequence was a repeat, because it still held state from the first sequence.

We can kick the can down the road a bit by initializing the option in the apply method,

thus allowing repeated uses of t:

def t_dedup[A] = new Transducer[A,A] {

def apply[R](rf:RFn[A,R]) : RFn[A,R] = new Function2[R,A,R] {

var aPrev : Option[A] = None

def apply(r:R,a:A) = aPrev match {

case Some(a0) if a==a0 => r

case _ => {

aPrev = Some(a)

rf(r,a)

}}}}}

val t = t_parsei ∘ t_dedup[Int]

println(sequence(t,List("1","2","2","3"))) // List (1,2,3)

println(sequence(t,List("3","2","1"))) // List (3,2,1)

but we'd still run into trouble if we had the nerve to re-use a transformed RFn:

val r = (t_parsei ∘ t_dedup[Int]) ⟐ {(x:Int, y:Int) => x + y}

println(List("1","2","2","3").foldLeft(0)(r))

println(List("3","2","1").foldLeft(0)(r))

gives us 6 and then 3. That won't do.

Wrapping state

Let's introduce a reduction holder, so we can store state alongside the reduction value,

case class RH[R,S](r:R, s: Option[S])

and rewrite t_dedup to use it:

def t_dedup[A] = new Transducer[A,A] {

def apply[R](rf : RFn[A,R]) : RFn[A,RH[R,A]]= {(rw,a) =>

rw match {

case RH(r,None) => RH(rf(r,a),Some(a))

case RH(r,Some(a0)) => if (a==a0) rw else RH(rf(r,a),Some(a))

}

}}

Now, we match against the accumulated reduction rather than against a mutable variable, which is

totally awesome... except that it won't compile, because apply has the wrong return type.

No big deal, we'll redefine the transducer

trait Transducer[+A, -B, S] {

def apply[R](f: RFn[A, R]): RFn[B, RH[R,S]]

...

}

so that function application always wraps up state. Sometimes, we won't need state, but we can always store something and then not use it.

A much more serious problem is that we no longer know how to write compose.

We know that the composition of Transducer[C,A,S] and Transducer[C,A,T] will

need to have an apply method with return type RFn[B,RH[RH[R,S],T]], as

the reduction is first wrapped with an S and then again with a T:

def compose[C](t2 : Transducer[C, A, T]): Transducer[C, B] =

new Transducer[C, B, DRAT_DRAT_AND_DOUBLE_DRAT ] {

def apply[R](rf: RFn[C, R]) : RFn[B, RH[RH[R,T],S]] = apply(t2(rf))

}

}

but exactly the type of Transducer thus produced is not just unclear but, as yet, inexpressible.

Somehow, its type will need to embed S and T in a manner that lets us deduce the

proper wrapped return type for apply. Furthermore, this should work no matter how many compositions

we put together.

Lists of Types

Having poked around in the shapeless codebase, we are... well, overall, we are sad and confused, because it doesn't seem possible to ever be smart enough to understand this. But, we can sort of pick up the idea that it's possible to build data structures with types:

sealed trait SList

sealed class SCons[S,T<:SList] extends SList

sealed class SNil extends SList

We can even write little programs, by declaring parameterized subtypes within the traits and having them "call" each other recursively:

sealed trait SList {

/* abstract */ type Concat[S2 <: SList] <: SList

}

sealed class SCons[S,T<:SList] extends SList {

type Concat[S2 <: SList] = SCons[S,T#Concat[S2]]

}

sealed class SNil extends SList {

type Concat[S2 <: SList] = S2

}

As long as all the types are known, the compiler will merrily expand SCons#Concat recursively,

stopping when it hits an SNil:

type S1 = SCons[Int,SNil]

type S2 = SCons[Double,SCons[String,SNil]]

type SS = S2#Concat[S1]

def x0 : SS = null.asInstanceOf[SCons[Double,SCons[String, SCons[Int,SNil]]]]

(The last line shows a handy trick for verifying the resolution of complicated types. If the cast didn't match the declaration, it wouldn't have compiled. There's a better trick, which we'll use later.)

So now we have half our answer. If we parameterize Transducer with SList, then

compose can simply return the concatenation:

trait Transducer[+A, -B, S <: SList] {

def compose[C,T <: SList](t2 : Transducer[C,A,T]) : Transducer[C,B,T#Concat[S]] = {

...}}

Now we have to figure out what the various apply methods return. Can we infer the proper

RH nesting from the SList? We can define another subtype to do the wrapping,

sealed class SCons[S,T<:SList] extends SList {

type Wrapped[R] = RH[T#Wrapped[R],S]

type Concat[S2 <: SList] = SCons[S,T#Concat[S2]]

}

sealed class SNil extends SList {

type Wrapped[R] = R

type Concat[S2 <: SList] = S2

}

so SCons[S1,SCons[S2,SCons[S3,SNil]]]#Wrapped[R] expands to

RH[SCons[S2,SCons[S3,SNil]]#Wrapped[R], S1]toRH[RH[SCons[S3,SNil]#Wrapped[R],S2],S1]toRH[RH[RH[SNil#Wrapped[R],S3],S2],S1]toRH[RH[RH[R,S3],S2],S1]

Building the state-aware Transducer

Thus armed, we can now write out the compose method, breaking it up to reassure ourselves that types line up:

trait Transducer[+A, -B, S <: SList] {

def apply[R](rf : RFn[A,R]) : RFn [B,S#Wrapped[R]]

def compose[C,T <: SList](t2 : Transducer[C,A,T]) : Transducer[C,B,T#Concat[S]] = {

val t1 = this

new Transducer[C,B,T#Concat[S]] {

def apply[R](rf: RFn[C,R]) : RFn[B,(T#Concat[S])#Wrapped[R]] = {

val rf2 : RFn[A,T#Wrapped[R]] = t2(rf)

val rf3 : RFn[B, S#Wrapped[T#Wrapped[R]]] = t1(rf2)

rf3.asInstanceOf[RFn[B,T#Concat[S]#Wrapped[R]]]

}}

}

That reassurance stops at the last line: equivalence of T#Concat[S]#Wrapped[R] and S#Wrapped[T#Wrapped[R]] seems to

be beyond the capability of the type engine to prove. Given concrete types for R, S

and T, it could expand both expressions to the same nest RHs, but, here in the depths of our transducer,

it doesn't know the concrete types.

They are, however, generally known at the time that compose is called, where

can force the compiler to check our math by using a type constraint.

We'll add an implicit instance of the =:= class to the compose declaration,

along the lines of.

(implicit ok : T#Concat[S]#Wrapped[R] =:= S#Wrapped[T#Wrapped[R]])

which is especially good fun as we can use infix form. The compiler will now alert us if these two types for some reason aren't identical in a particular concrete instance.

Of course, we won't know R until apply time, but our primary concern is that all the

fiddly wrapping works out, which is really independent of R, so we'll use Any instead.

def compose[C,T <: SList](t2 : Transducer[C,A,T])

(implicit ok : T#Concat[S]#Wrapped[Any] =:= S#Wrapped[T#Wrapped[Any]]) : Transducer[C,B,T#Concat[S]] = {

val t1 = this

new Transducer[C,B,T#Concat[S]] {

def apply[R](rf: RFn[C,R]) : RFn[B,(T#Concat[S])#Wrapped[R]] =

t1(t2(rf)).asInstanceOf[RFn[B,T#Concat[S]#Wrapped[R]]]

}}

def ∘[C,T <: SList](t2 : Transducer[C,A,T])(implicit ok : T#Concat[S]#Wrapped[Any] =:= S#Wrapped[T#Wrapped[Any]]) = compose[C,T] (t2)

def ⟐[R] = apply[R] _

The cast is still necessary, but it feels a bit safer now. (If anyone knows how to avoid the cast entirely, I'd love to hear it...)

We can now write a new deduplicator, totally free of vars and side-effects,

that passes along state to itself by

wrapping it up with the succeeding reduction value and declares its

intention to do so with the wrapping directive type SCons[A,SNil]:

def t_dedup[A] = new Transducer[A,A,SCons[A,SNil]] {

def apply[R](rf : RFn[A,R]) : RFn[A,RH[R,A]] = {(rw,a) =>

rw match {

case RH(r,None) => RH(rf(r,a),Some(a))

case RH(r,Some(a0)) => if (a==a0) rw else RH(rf(r,a),Some(a))

}

}}

The standard factories for simple mappings and filterings are essentially

identical to the state-unaware versions, because SNil::Wrapped[R] is just R:

def map[A, B](f: A => B): Transducer[B, A, SNil] = new Transducer[B, A, SNil] {

def apply[R](rf: RFn[B, R]) : RFn[A,R]= (r, a) => rf(r,f(a)) }

def filter[A](p: A => Boolean): Transducer[A, A, SNil] = new Transducer[A, A, SNil] {

def apply[R](rf: RFn[A, R]) : RFn[A,R]= (r, a) => if (p(a)) rf(r,a) else r }

The state of statelessness

The last, somewhat annoying, piece we're going to need is a way to wrap up the initial

reduction value in layers of RH(...,None) and then to unwrap the final reduction.

def wrap[R,S<:SList](r:R): S#Wrapped[R]

def unwrap[R,S<:SList](fr : S#Wrapped[R]) : R

The reason these are annoying is that the object wrapping naturally follows the type wrapping, but we don't have a particularly beautiful way of accessing the wrapped type at runtime. Instead, I think, we have to use the unbeautiful workaround of manifests, which are always introduced with the warning that they're experimental and might be removed from Scala some day but for now you should look at the source file.

If we add to wrap an (implicit ms : Manifest[S])

then an instance of ms will be available at runtime, built according

to a recipe captured at the time of compilation. From the manifest, you

can extract quit a lot of information, but for our purposes, the most important

bit is that typeArguments will return a list of manifests corresponding

to type parameters.

We expect Manifest[SCons] to have two type parameters, of which the second

is Manifest[SList], and Manifest[SNil] to have no type parameters.

When the following function is called as sdepth[S], it will implicitly receive

a complete manifest of the nested SList; then it will call itself recursively

with successively peeled back manifests, counting up the number of layers:

def sdepth[S <: SList](implicit ms : Manifest[S]) : Int = ms.typeArguments match {

case Nil => 0

case _ => sdepth(ms.typeArguments(1).asInstanceOf[Manifest[S]]) + 1

}

We can now use sdepth to direct the wrapping and unwrapping festivities. There

are still casts that I wish we could get rid of, but I don't see how:

def wrap[R,S<:SList](r:R)(implicit ms : Manifest[S]): S#Wrapped[R] = {

sdepth[S] match {

case 0 => r.asInstanceOf[S#Wrapped[R]]

case n => {

var x : Object = RH(r,None)

for(i <- 1 until n) {x = RH(x,None)}

x.asInstanceOf[S#Wrapped[R]]

}

}

}

def unwrap[R,S<:SList](fd : S#Wrapped[R])(implicit ms : Manifest[S]) : R = {

var x : Any = fd

for(i <- 1 to sdepth[S]) {x = x.asInstanceOf[RH[AnyRef,AnyRef]].r}

x.asInstanceOf[R]

}

The standard sequence function is now a one-liner (assuming that type declarations

don't count, which would in fact be a bit silly in discussion of type programming):

def sequence[A,B,S <: SList : Manifest](tr: Transducer[B,A,S], data: Seq[A]) : Seq[B] =

unwrap (data.foldLeft(wrap(data.companion.empty[B]))(tr {_ :+ _}))

It does quite a lot:

- It initializes an empty

Seq[B]of the same container type as the inputSeq[A], wraps it up as anS#Wrapped[Seq[B]]- transduces the

(Seq[B],B) => Seq[B])append function_ :+ _ - into a function

(S#Wrapped[Seq[B],A) => S#Wrapped[Seq[B] - reduces (folds) into an

S#Wrapped[Seq[B]], which it - unwraps it into a

Seq[B].

Along the same lines, it will be handy to have a state-aware foldLeft to do

the same sort of prep work for general reductions:

class FoldableSA[A](as:Seq[A]) {

def foldLeftSA[B,S<:SList:Manifest](z:B)(rf: RFn[A, S#Wrapped[B]]) =

unwrap(as.foldLeft(wrap(z))(rf))

}

implicit def toFoldableSA[A](as:Seq[A]) : FoldableSA[A] = new FoldableSA[A](as)

Rock and Roll

Let's verify that we don't have the same hidden state problems as the previous implementations:

val t_parsei: Transducer[Int, String, SNil] = map(_.toInt)

val t_root2 : Transducer[Double, Int, SNil] = map(i => Math.pow(2.0, 1.0 / i))

val t = t_parsei ∘ t_dedup[Int]

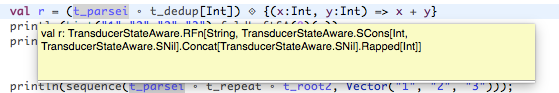

val r = (t_parsei ∘ t_dedup[Int]) ⟐ {(x:Int, y:Int) => x + y}

Hovering over r in Eclipse shows the expected function type:

And,

scala> println(sequence(t,List("1","2","2","3")))

List(1, 2, 3)

scala> println(List("1","2","2","3").foldLeftSA(0)(r))

List(3, 2, 1)

scala> println(sequence(t,List("3","2","1")))

6

scala> println(List("3","2","1").foldLeftSA(0)(r))

6

Tada!

Well then

I think this came out pretty well. State is where it should be. Type of state, type of reduction and type of transduction are specified strongly and, while I'm no Scala stylist, the complexity from a user's standpoint is not overbearing.

It is satisfying that the conceptual manner in which transduction type operates on reduction type can be realized in Scala, and the techniques employed seem quite general. On the other hand, they also feel a bit tricky, as if we're getting away with something the language doesn't naturally support, and I don't understand the bounds of their applicability. (It's clearly time to delve into shapeless, which is fortunate because I was running out of excuses for avoiding Haskell.)

It does not seem advisable to attempt this sort of type transmutation in core.typed;

on the other hand, it appears alluringly simple in schema, where

you get an entire Turing-complete language for type analysis.

Comments

comments powered by Disqus